I’ve been asked on Twitter about “foundational readings” in the physics of software, besides my papers.

That’s either an easy question or a very hard question, depending on how you want to answer.

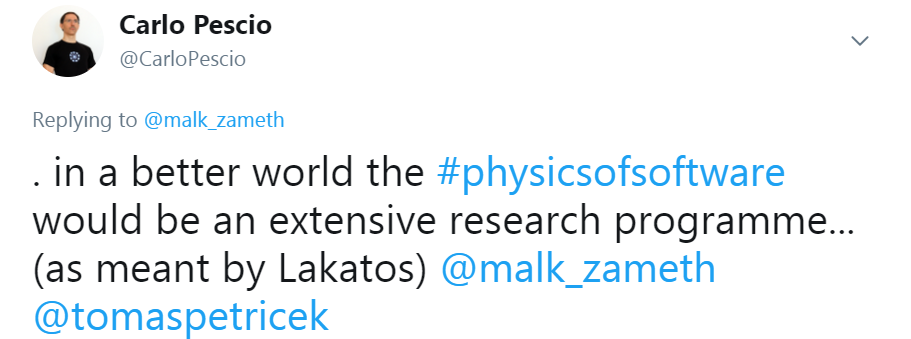

On one hand, I pretty much came up with all the ideas in what I called “the physics of software”. So I could say that, beyond the papers on this website and on carlopescio.com, my talks, random notes like this one, and a few conversations in the forum, there is nothing else to read. Well, there is in fact a talk by Jérémie Chassaing on the Thermodynamics of Software, inspired by the general conceptual framework of the physics of software (watch it on YouTube), but that would be it.

That’s not a great answer though. One may want to investigate things on their own, and of course I based my reasoning on previous knowledge, so there has to be some foundational reading somewhere. Strictly speaking, it would not be about “the physics of software”, but about notions that are relevant for the physics of software. In fact, I have hundreds of those works filed under “references”.

Listing hundreds of papers / books isn’t helpful either, so I’ve put together this list (some entries are papers / books, some are just topics). In many cases, you will find references and links to more specific works inside my papers / posts. I’ve grouped the relevant works into a few categories, which should be self-explanatory: General knowledge; Inspirational works; Failed attempts; Decision space; Artifact space; Run-time space.

General knowledge

A working knowledge of math, science, engineering, computer science / formal methods, and software design / programming is needed. Some epistemology would help. Some psychology helps too; I’ve found the Gestalt approach (particularly the sub-field of Productive Thinking) quite useful.

Information theory: the classical, entropy-centric work by Shannon (“A Mathematical Theory of Communication”), but also Kolmogorov complexity and the Constructor Theory perspective on information; more generally, learning about Constructor Theory would provide an interesting point of view.
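The two notions above measure different things, and a tiny experiment makes the difference tangible. Below is a minimal Python sketch, my illustration and not taken from any of the works cited. Two strings with identical symbol frequencies have exactly the same Shannon entropy. However, a compressor, used here as a crude stand-in for Kolmogorov complexity, finds one of them far more regular than the other. The function names are hypothetical. Kolmogorov complexity is uncomputable, so zlib's output size is only an upper-bound proxy.

```python
import math
import random
import zlib
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Empirical Shannon entropy of `text`, in bits per symbol (character)."""
    if not text:
        return 0.0
    n = len(text)
    # H = -sum(p * log2(p)) over the observed character frequencies
    return -sum((c / n) * math.log2(c / n) for c in Counter(text).values())


def compressed_size(text: str) -> int:
    """Bytes needed by zlib (level 9) to encode `text` as UTF-8.

    Kolmogorov complexity is uncomputable; a real compressor only yields an
    upper bound on description length, so this is a rough proxy at best.
    """
    return len(zlib.compress(text.encode("utf-8"), 9))


# Two strings with identical character frequencies (500 'a', 500 'b'):
regular = "ab" * 500
chars = list(regular)
random.Random(42).shuffle(chars)  # fixed seed for reproducibility
shuffled = "".join(chars)

print(shannon_entropy(regular), shannon_entropy(shuffled))  # 1.0 1.0
print(compressed_size(regular), compressed_size(shuffled))  # regular is far smaller
```

Shannon's measure only sees the frequency distribution. A description-length view also sees the structure of the particular string.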

Symmetry in software: there are many works by Jim Coplien and Liping Zhao, such as “Symmetry Breaking in Software Patterns”.

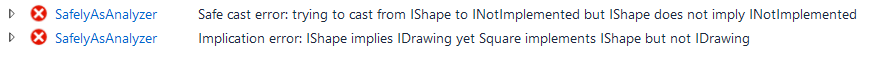

Learning about intensional vs. extensional definitions, nominative vs. structural type systems, etc. will help. These should be covered in a master’s-level CS education, but I’m a bit out of touch with what is being taught today.
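For readers who haven't met these distinctions, a small Python sketch may help. This is my illustration, and all names are hypothetical. The first part defines the same set in two ways: extensionally, by listing its members, and intensionally, by a membership rule. The second part contrasts two kinds of type check. A nominative check uses an abstract base class, where membership must be declared. A structural check uses a runtime-checkable `typing.Protocol`, where having a method with the right name is enough. Python mixes both styles, so take this as an illustration of the distinction, not as a model of any particular type system.

```python
from abc import ABC, abstractmethod
from typing import Protocol, runtime_checkable

# Extensional definition: the set is given by enumerating its members.
EVENS_BELOW_10_EXT = {0, 2, 4, 6, 8}


# Intensional definition: the set is given by a membership rule.
def is_even(n: int) -> bool:
    return n % 2 == 0


EVENS_BELOW_10_INT = {n for n in range(10) if is_even(n)}


# Nominative typing: membership must be declared (subclassing or register()).
class NominalShape(ABC):
    @abstractmethod
    def area(self) -> float: ...


# Structural typing: membership follows from having the right members
# (the runtime check only looks for the method name, not its signature).
@runtime_checkable
class StructuralShape(Protocol):
    def area(self) -> float: ...


class Square:  # inherits from neither of the types above
    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side * self.side


sq = Square(2.0)
print(EVENS_BELOW_10_EXT == EVENS_BELOW_10_INT)  # True: same extension
print(isinstance(sq, NominalShape))     # False: never declared as a NominalShape
print(isinstance(sq, StructuralShape))  # True: it has an `area` method
```

Calling `NominalShape.register(Square)` would make the nominal check succeed as well. That is still a nominal act: the relationship is declared, not inferred from structure.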

It would also help to have some understanding of theory building, the role of a scientific theory, and the history of science.

Inspirational Works

Many early ideas came from reading Christopher Alexander’s works, specifically:

- Notes on the Synthesis of Form (this is also the book that inspired Coupling / Cohesion back in the 70s)

- The Nature of Order

- Harmony-Seeking Computations

Early ideas on software as executable knowledge came from Phillip Armour’s “The Laws of Software Process”.

More recently, a few unpublished ideas came from “Concepts of Force” by Max Jammer.

Failed Attempts

One could argue that the most prominent failed attempt is mine : ), but along with that, I would list:

- “Software Science” by Maurice Halstead

- Most of the literature on metrics
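Halstead's measures are easy to compute once a program has been split into operators and operands. Here is a minimal Python sketch using the standard definitions of vocabulary, length, volume, difficulty and effort. The class and function names are hypothetical. The token classification is assumed to be done by hand, because deciding what counts as an operator or an operand depends on language-specific counting rules that the sketch does not attempt to encode.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class HalsteadMeasures:
    vocabulary: int    # n = n1 + n2 (distinct operators + distinct operands)
    length: int        # N = N1 + N2 (total operators + total operands)
    volume: float      # V = N * log2(n)
    difficulty: float  # D = (n1 / 2) * (N2 / n2)
    effort: float      # E = D * V


def halstead(operators: Sequence[str], operands: Sequence[str]) -> HalsteadMeasures:
    """Compute Halstead's basic measures from pre-classified token streams."""
    n1, n2 = len(set(operators)), len(set(operands))
    N1, N2 = len(operators), len(operands)
    if n1 == 0 or n2 == 0:
        raise ValueError("need at least one operator and one operand")
    vocabulary, length = n1 + n2, N1 + N2
    volume = length * math.log2(vocabulary)
    difficulty = (n1 / 2) * (N2 / n2)
    return HalsteadMeasures(vocabulary, length, volume, difficulty, difficulty * volume)


# Hand-tokenized statement `x = a + a`: operators {=, +}, operands {x, a}.
m = halstead(["=", "+"], ["x", "a", "a"])
print(m)  # HalsteadMeasures(vocabulary=4, length=5, volume=10.0, difficulty=1.5, effort=15.0)
```

The arithmetic is trivial. What the numbers actually mean is a separate matter, and that is exactly where the theory struggled.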

Two CS papers are worth reading if we want to avoid the mistakes commonly made when defining metrics: “Property-based software engineering measurement” by Briand / Morasca / Basili and “Software Measurement: A Necessary Scientific Basis” by Fenton.

Decision space

There isn’t much work on decisions that aligns with the way I model them in the decision space. Sure, one has to learn Conway’s law and a few other “laws”. Reading “Systemantics” by John Gall would also help. A different model, but with some points of contact, is being developed by Mark Burgess as part of Promise Theory, which wouldn’t hurt to read about.

Conceptual spaces are also explored in “The Geometry of Meaning: Semantics Based on Conceptual Spaces” by Peter Gärdenfors, which I haven’t fully read yet : )

Artifact space

Here, to be fair, there isn’t much that closely resembles my perspective. I would suggest reading the early papers on coupling and cohesion, if only to appreciate how different they were from what we hear today. Connascence (Meilir Page-Jones) is close enough to entanglement (which I nonetheless consider a better concept) and deserves to be better known.
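Connascence is easiest to grasp with the classic example of position versus name. Below is a minimal Python sketch with hypothetical functions. In the first version, every caller is connascent with the definition on argument order. In the second, keyword-only parameters reduce that dependency to connascence of name, which the connascence literature generally treats as a weaker form. This illustrates connascence only; it is not meant as a model of entanglement.

```python
# Connascence of position: callers must agree with the definition on the
# order of the arguments. A swapped call still runs, silently doing the wrong thing.
def transfer_positional(amount: float, source: str, target: str) -> str:
    return f"move {amount:.2f} from {source} to {target}"


# Connascence of name: the bare `*` makes the parameters keyword-only, so
# callers depend on parameter names, not on their order.
def transfer_named(*, amount: float, source: str, target: str) -> str:
    return f"move {amount:.2f} from {source} to {target}"


print(transfer_positional(100.0, "savings", "checking"))
print(transfer_positional(100.0, "checking", "savings"))  # wrong direction, no error raised
print(transfer_named(target="checking", source="savings", amount=100.0))
```

Renaming a keyword-only parameter still breaks callers, but the break is loud (a `TypeError`) instead of silent. That is one practical reason to prefer the weaker form.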

Generally speaking, understanding principles, patterns, etc. would help. This is not because they form the physics of software, but because, deep beneath many principles, there are phenomena belonging to the physics of software. Reading about power laws and how they manifest in software is also useful.
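A common first check for power-law behaviour is whether the data forms a straight line on a log-log plot, for example when looking at sizes ranked across modules. The sketch below uses a hypothetical `fit_power_law` helper that estimates the exponent by ordinary least squares in log space. The data is synthetic: it is generated from a known exponent only to show that the fit recovers it. Least squares on log-log data is known to be unreliable for real, noisy distributions, so treat it as a first look rather than a proper estimate.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Fit y = c * x**(-alpha) by ordinary least squares on (log x, log y).

    Returns (c, alpha). All xs and ys must be strictly positive.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mean_x, mean_y = sum(lx) / len(lx), sum(ly) / len(ly)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    var = sum((a - mean_x) ** 2 for a in lx)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Synthetic rank/size data generated from an exact power law (c=1000, alpha=1.5),
# only to check that the fit recovers the parameters.
ranks = list(range(1, 51))
sizes = [1000.0 * r ** -1.5 for r in ranks]
c, alpha = fit_power_law(ranks, sizes)
print(f"c={c:.1f}, alpha={alpha:.2f}")  # c=1000.0, alpha=1.50
```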

Reading the rationale papers / books on programming language design helps too. Languages ultimately influence the way we structure our artifacts, and understanding the design choices of language designers helps quite a bit. I would also suggest investigating unpopular paradigms (like AOP), which offer affordances not usually found in mainstream programming.
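AOP's distinctive affordance is the ability to attach behaviour to many join points selected by a rule (a pointcut), without touching the code at those points. Python has no AOP facility in its standard library, so the sketch below only approximates the idea with a hypothetical `weave` helper. A regular expression over method names acts as a crude pointcut. A function wrapping each selected call acts as "around" advice. Real AOP languages such as AspectJ offer far richer pointcut languages; this merely hints at the affordance.

```python
import functools
import re
from typing import Any, Callable, List


def weave(cls: type, pointcut: str, advice: Callable[..., Any]) -> type:
    """Wrap each public method of `cls` whose name fully matches the regex
    `pointcut` so that calls go through `advice(method, *args, **kwargs)`."""
    for name, attr in list(vars(cls).items()):
        if name.startswith("_") or not callable(attr) or not re.fullmatch(pointcut, name):
            continue

        def make_wrapper(method: Callable[..., Any]) -> Callable[..., Any]:
            @functools.wraps(method)
            def wrapper(*args: Any, **kwargs: Any) -> Any:
                return advice(method, *args, **kwargs)
            return wrapper

        setattr(cls, name, make_wrapper(attr))
    return cls


calls: List[str] = []


def trace(method: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
    """'Around' advice: record the call, then proceed with the original method."""
    calls.append(method.__name__)
    return method(*args, **kwargs)


class Account:
    def __init__(self) -> None:
        self.balance = 0

    def deposit(self, amount: int) -> None:
        self.balance += amount

    def withdraw(self, amount: int) -> None:
        self.balance -= amount

    def report(self) -> str:
        return f"balance={self.balance}"


weave(Account, r"deposit|withdraw", trace)  # the "pointcut" selects two methods

acct = Account()
acct.deposit(50)
acct.withdraw(20)
print(acct.report())  # balance=30
print(calls)          # ['deposit', 'withdraw']
```

Note that `Account` itself says nothing about tracing. The cross-cutting concern lives entirely in the advice and the pointcut, which is the structural property that mainstream code usually lacks.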

Run-Time space

I have left many references in my blog (especially when talking about distance and friction), so I won’t repeat them here. Generally speaking, an understanding of CPU / computer architecture, cache / memory architecture, and well-known results like Amdahl’s law and the CAP theorem is necessary to get a grip on the run-time space.
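Amdahl's law is simple enough to state in a few lines. If a fraction p of the work can be parallelized across n workers, the best achievable speedup is 1 / ((1 - p) + p / n), which can never exceed 1 / (1 - p). Here is a minimal Python sketch that makes the ceiling visible (the function names are mine).

```python
import math


def amdahl_speedup(p: float, n: int) -> float:
    """Best-case speedup when a fraction p of the work is spread over n workers."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if n < 1:
        raise ValueError("n must be >= 1")
    # the serial part (1 - p) is unaffected; the parallel part p shrinks by a factor n
    return 1.0 / ((1.0 - p) + p / n)


def amdahl_ceiling(p: float) -> float:
    """Limit of amdahl_speedup(p, n) as n grows without bound: 1 / (1 - p)."""
    return math.inf if p == 1.0 else 1.0 / (1.0 - p)


for workers in (1, 2, 8, 64, 1024):
    print(workers, round(amdahl_speedup(0.9, workers), 2))
print("ceiling:", round(amdahl_ceiling(0.9), 2))
```

With p = 0.9, the speedup stays below 10 no matter how many workers are added. The serial fraction, not the hardware, sets the limit.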

This is not a definitive list, and any suggestion is welcome. Remember: it’s not about being a classic, it’s about being relevant for someone learning the physics of software.
